Penetration Testing

March 5, 2018

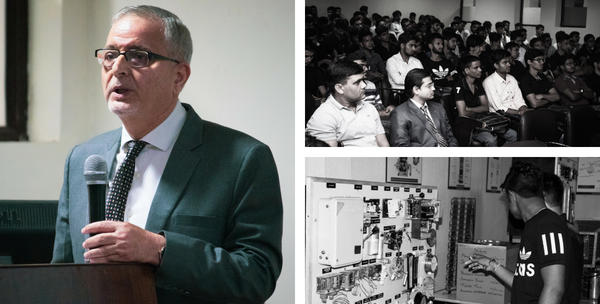

ORIENTATION PROGRAMME FOR B.TECH & BCA 1 st SEM STUDENTS

August 16, 2018

In the five years before MapReduce was published, the paper's authors and many others at Google implemented hundreds of special-purpose computations that process large amounts of raw data, such as crawled documents and web request logs, to compute various kinds of derived data: inverted indices, various representations of the graph structure of web documents, summaries of the number of pages crawled per host, the set of most frequent queries in a given day, and so on. Most such computations are conceptually straightforward. However, the input data is usually large, and the computations have to be distributed across hundreds or thousands of machines in order to finish in a reasonable amount of time. The issues of how to parallelize the computation, distribute the data, and handle failures conspire to obscure the original simple computation with large amounts of complex code.

In response, Google devised MapReduce, an abstraction that expresses the simple computation while hiding the details of parallelization, fault tolerance, and data distribution. In 2004, Google published the paper that introduced MapReduce to the world. Early in 2005, the Nutch developers had a working MapReduce implementation in Nutch, and by the middle of that year, all the major Nutch algorithms had been ported to run using MapReduce and NDFS. Work on improving and extending the technique has continued ever since.

MapReduce is a programming model for data processing. Hadoop runs MapReduce programs written in various languages, such as Java, Ruby, Python, and C++. Most importantly, MapReduce programs are inherently parallel, which puts very large-scale data analysis into the hands of anyone with enough machines at their disposal. MapReduce has been used for applications such as generating search indexes, document clustering, access log analysis, and the other kinds of data analysis discussed above. A MapReduce job splits the input dataset, which is stored in the Hadoop Distributed File System (HDFS), into independent chunks (blocks). It has two main kinds of task, map and reduce, and the tasks of each kind run in a completely parallel manner. The input phase feeds the mapper <key, value> pairs; the mapper processes these pairs, and its output is partitioned using a partitioning function that the user can define. The data is then shuffled, sorted, and sent to the reducer, which reduces it to a condensed form and generates the output.
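The flow described above (input pairs, map, partition, shuffle and sort, reduce) can be sketched in a few lines of plain Python. This is a single-process illustration only, not Hadoop's API: the names `map_fn`, `partition_fn`, `reduce_fn`, and `run_job` are hypothetical, and word counting is assumed as the example computation.

```python
from collections import defaultdict


def map_fn(_key, line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def partition_fn(key, num_reducers):
    """Partition: pick a reducer for a key (a simple deterministic rule)."""
    return sum(key.encode("utf-8")) % num_reducers


def reduce_fn(key, values):
    """Reduce: collapse all values seen for one key into a single count."""
    return key, sum(values)


def run_job(records, num_reducers=2):
    # Map phase: each (key, value) record is processed independently, and
    # every emitted pair is routed to the partition chosen by partition_fn.
    partitions = [defaultdict(list) for _ in range(num_reducers)]
    for key, value in records:
        for out_key, out_value in map_fn(key, value):
            index = partition_fn(out_key, num_reducers)
            partitions[index][out_key].append(out_value)

    # Shuffle and sort, then reduce: within each partition the values are
    # already grouped by key; keys are visited in sorted order.
    output = []
    for part in partitions:
        for key in sorted(part):
            output.append(reduce_fn(key, part[key]))
    return output


# Input records are (line number, line text) pairs.
records = [(0, "the quick brown fox"), (1, "the lazy dog"), (2, "the fox")]
result = dict(run_job(records))
```

Here `result` maps each word to its total count across all lines. In a real cluster each partition would be handled by a separate reducer on a separate machine; the loop over `partitions` stands in for that.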

Programs written in this functional style are automatically parallelized and executed on a large cluster of commodity machines. The runtime system takes care of the details of partitioning the input data, scheduling the program’s execution across a set of machines, handling machine failures, and managing the required inter-machine communication. This allows programmers without any experience with parallel and distributed systems to easily utilize the resources of a large distributed system.
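To make the division of labor concrete, the sketch below separates what the programmer writes (a map function and a reduce function) from what a runtime would do on their behalf: splitting the input, scheduling tasks on workers, and re-running a failed task. It is a toy under stated assumptions: a thread pool stands in for a cluster, the failure is simulated, and the names `mapreduce`, `run_with_retries`, `parity_square`, and `total` are hypothetical.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def run_with_retries(task, arg, max_attempts=3):
    """Re-run a failed task; a stand-in for the runtime's failure handling."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task(arg, attempt)
        except RuntimeError:
            if attempt == max_attempts:
                raise


def mapreduce(data, map_fn, reduce_fn, num_splits=3, workers=3):
    # Partition the input into independent splits.
    splits = [data[i::num_splits] for i in range(num_splits)]

    def map_task(split, attempt):
        # Simulated machine failure: the first attempt on split 0 fails.
        if attempt == 1 and split is splits[0]:
            raise RuntimeError("simulated worker failure")
        return [pair for item in split for pair in map_fn(item)]

    # Schedule the map tasks across a pool of workers.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(lambda s: run_with_retries(map_task, s), splits))

    # Group intermediate values by key, then reduce each group.
    groups = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            groups[key].append(value)
    return {key: reduce_fn(key, values) for key, values in sorted(groups.items())}


# --- User code: only these two functions describe the actual computation ---
def parity_square(n):
    yield ("even" if n % 2 == 0 else "odd"), n * n


def total(_key, values):
    return sum(values)


result = mapreduce(list(range(1, 7)), parity_square, total)
```

The user-supplied functions contain no threading, splitting, or retry logic; the simulated failure on the first split is absorbed by the retry loop without the user code being aware of it.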

The MapReduce programming model has been successfully used at Google for many different purposes. This success can be attributed to several reasons. First, the model is easy to use, even for programmers without experience with parallel and distributed systems, since it hides the details of parallelization, fault tolerance, locality optimization, and load balancing. Second, a large variety of problems are easily expressible as MapReduce computations. For example, MapReduce is used to generate data for Google’s production web search service, for sorting, for data mining, for machine learning, and for many other systems. Third, an implementation of MapReduce has been developed that scales to large clusters comprising thousands of machines. The implementation makes efficient use of these machine resources and is therefore suitable for many of the large computational problems encountered at Google.